This post took a while to see the light of day. I was working on my code submission for the Azure Search for Search contest, and there was a lot to catch up on. However, if you are a Windows Workflow Foundation developer looking for ways to host your workflows on Azure, or you want to hand control of business logic to your clients through workflows, this post should make up for the delay. Let’s get started!

Workflows can be broadly categorized as durable or non-durable. Durable Workflow Services are inherently long running, persist their state, and use correlation for follow-on activities. Non-durable workflows are stateless: they start and run to completion in a single burst.

Non-durable workflows are readily supported by Windows Azure with a few configuration changes. However, if you want to host durable workflows in Azure, you have a few options:

- Use Logic Apps to build your workflows (requires rewriting your workflows).

- Use Workflow Manager (not updated for a while; uses SQL databases to store persistence data).

- Build your own workflow host and enjoy total control over persistence and tracking (use low-cost Azure Table storage to store tracking and persistence data).

What Do We Need to Build?

The big buckets of functionality required to host durable Workflow Services are:

- Monitoring store: You would need to implement your own tracking participant that stores tracking information in Azure Table storage.

- Instance store: You would need to implement your own instance store that persists the workflow state whenever the workflow requests it, for example when it creates a bookmark.

- Reliability: We will use an Azure Service Bus queue to accept the input that triggers workflows. A message is removed from the queue only after the host has processed it, so work survives host failures, and more worker instances can listen on the same queue to scale out.
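The post does not show the tracking participant's table layout. The following is therefore only a sketch under assumptions, and the `TrackingRow` type and its columns are hypothetical. It shows one way to key tracking rows in Azure Table storage. WF tracking records expose an `InstanceId` and a `RecordNumber`. Using the instance id as the partition key lets one partition query return an instance's whole history. Because Table storage sorts `RowKey` values as strings, the record number is zero-padded so that lexical order matches numeric order.

```csharp
using System;
using System.Globalization;

// Hypothetical tracking row; the sample's real tracking participant may use other columns.
public sealed class TrackingRow
{
    public string PartitionKey { get; set; }
    public string RowKey { get; set; }
    public string ActivityName { get; set; }
    public string State { get; set; }
}

public static class TrackingRowFactory
{
    // PartitionKey: workflow instance id, so all rows of one instance share a partition.
    // RowKey: record number zero-padded to 19 digits (the width of long.MaxValue),
    // because Table storage compares RowKeys as strings, not numbers.
    public static TrackingRow Create(Guid instanceId, long recordNumber, string activityName, string state)
    {
        if (recordNumber < 0)
        {
            throw new ArgumentOutOfRangeException(nameof(recordNumber));
        }

        return new TrackingRow
        {
            PartitionKey = instanceId.ToString("N"),
            RowKey = recordNumber.ToString("D19", CultureInfo.InvariantCulture),
            ActivityName = activityName,
            State = state
        };
    }
}
```

Without the padding, record 10 would sort before record 2.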

Need Code?

As always, the sample I used is available for download here.

I post all code samples on my GitHub account for you to use.

What We Will Build

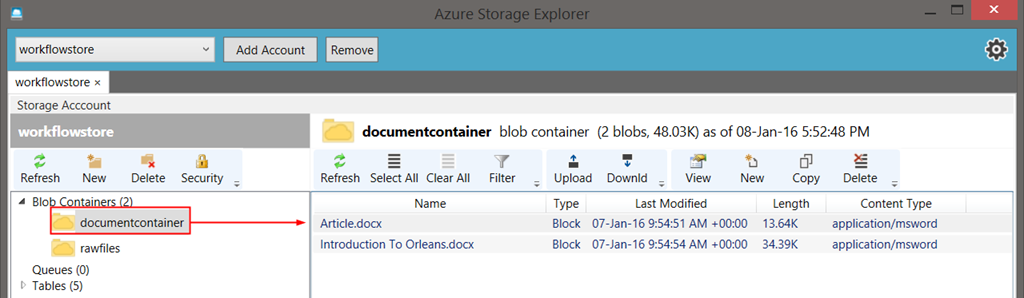

We will build a very simple workflow host that triggers a workflow when it receives a message from a Service Bus queue. We will build an instance store that saves the state of workflows and helps rehydrate a workflow when a bookmark is resumed. Next, we will test this infrastructure with a simple workflow made of two activities. The first code activity lists all blobs inside an Azure storage container and then bookmarks its progress. The second activity works through this list of blobs and copies all Word documents (*.docx) from the source container to the target container.

Let’s Get Down To Business

I won’t be able to walk through writing all of the code here. Instead, I suggest that you download the sample and let me walk you through it.

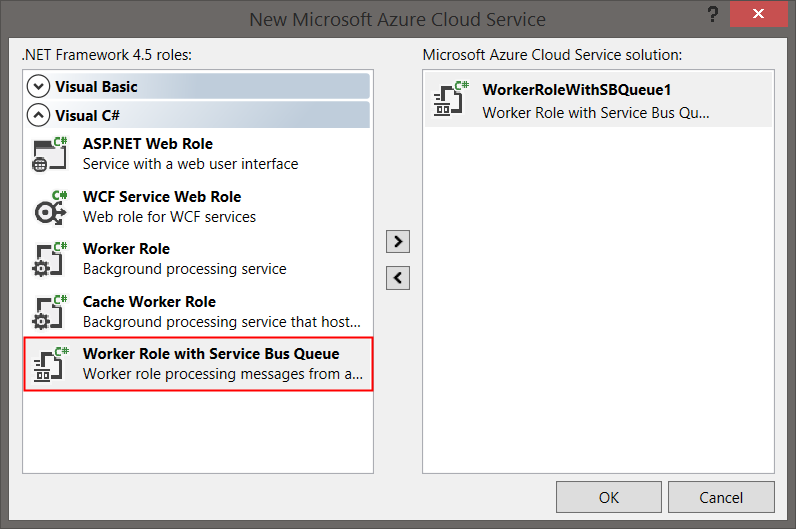

- Create a cloud project: select the Azure Cloud Service template, and then select the Worker Role with Service Bus Queue template. This is the template I used for the WorkflowOnAzure cloud service project. Now, I will walk you through the other projects in the solution.

We will start by exploring the important entities (in the Entities folder).

- ChannelData: This class represents the input argument that the workflow expects. `ChannelData.Payload.ItemList` will contain the names of all blobs in the source container. `ChannelData.Payload.PersistentPayload` will contain all the configuration values (such as the source container name) that the workflow requires. `ChannelData.Payload.WorkflowIdentifier` will contain the GUID of the workflow instance, so that we can tell the various instances of a workflow apart.

- HostQueueMessage: This is the schema of the message sent to the Service Bus queue. The test client sends it to trigger a new workflow, and the workflow host sends it when it unloads a workflow after persisting its state through a bookmark.

- InstanceData: This is the schema of the data stored in Azure Table storage, which acts as the workflow's instance store.

Next, let’s go through the contents of the DataStorage folder.
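Before we open DataStorage, here is a minimal C# sketch of how these entities might look. Only the property names come from the post (`ItemList`, `PersistentPayload`, `WorkflowIdentifier`, `IsBookmark`). The `Payload` type name, the choice of `List<string>` and `Dictionary<string, string>`, and the `ToChannelData` helper are assumptions for illustration. The sample's real types may differ.

```csharp
using System;
using System.Collections.Generic;

// Property names follow the post; the concrete types are assumptions.
public sealed class Payload
{
    public List<string> ItemList { get; set; } = new List<string>();                 // blob names
    public Dictionary<string, string> PersistentPayload { get; set; } =
        new Dictionary<string, string>();                                           // e.g. container names
    public Guid WorkflowIdentifier { get; set; }                                    // identifies the instance
}

public sealed class ChannelData
{
    public Payload Payload { get; set; } = new Payload();
}

public sealed class HostQueueMessage
{
    public bool IsBookmark { get; set; }
    public Guid WorkflowIdentifier { get; set; }
    public Dictionary<string, string> PersistentPayload { get; set; }
    public List<string> ItemList { get; set; }

    // Hypothetical helper: rebuild the workflow argument from a queue message,
    // substituting empty collections for anything the sender left out.
    public ChannelData ToChannelData() => new ChannelData
    {
        Payload = new Payload
        {
            WorkflowIdentifier = WorkflowIdentifier,
            PersistentPayload = PersistentPayload ?? new Dictionary<string, string>(),
            ItemList = ItemList ?? new List<string>()
        }
    };
}
```

A freshly triggered workflow arrives with an empty `ItemList`. A bookmark message also carries the list that the first activity filled in.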

- AzureTableStorageRepository: This class contains functions to perform CRUD operations on Azure Table storage.

- AzureTableStorageAssist: This class contains helper functions for `AzureTableStorageRepository`.

Next, let’s see what resides in the Utilities folder.
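Before looking at the Utilities folder, one repository detail is worth making concrete. Azure Table storage supports only a fixed set of Entity Data Model (EDM) property types, and the sample's `TypeSwitch` utility translates between those and .NET types. The sketch below uses a plain dictionary lookup rather than a type switch. The `EdmTypeMap` name is hypothetical, and this is not the sample's implementation.

```csharp
using System;
using System.Collections.Generic;

public static class EdmTypeMap
{
    // The property types Azure Table storage supports, keyed by their .NET equivalents.
    private static readonly Dictionary<Type, string> ToEdm = new Dictionary<Type, string>
    {
        [typeof(byte[])] = "Edm.Binary",
        [typeof(bool)] = "Edm.Boolean",
        [typeof(DateTime)] = "Edm.DateTime",
        [typeof(double)] = "Edm.Double",
        [typeof(Guid)] = "Edm.Guid",
        [typeof(int)] = "Edm.Int32",
        [typeof(long)] = "Edm.Int64",
        [typeof(string)] = "Edm.String",
    };

    // Declared after ToEdm, so ToEdm is already initialized when this runs.
    private static readonly Dictionary<string, Type> FromEdm = BuildReverse();

    private static Dictionary<string, Type> BuildReverse()
    {
        var reverse = new Dictionary<string, Type>(StringComparer.Ordinal);
        foreach (var pair in ToEdm)
        {
            reverse[pair.Value] = pair.Key;
        }

        return reverse;
    }

    public static string GetEdmType(Type clrType)
    {
        // Nullable<T> is stored with the same EDM type as T.
        var type = Nullable.GetUnderlyingType(clrType) ?? clrType;
        if (ToEdm.TryGetValue(type, out var edm))
        {
            return edm;
        }

        throw new NotSupportedException($"{clrType} has no Azure Table storage equivalent.");
    }

    public static Type GetClrType(string edmType) =>
        FromEdm.TryGetValue(edmType, out var type) ? type : throw new NotSupportedException(edmType);
}
```

Types outside this set, such as `decimal`, have to be converted (for example, to a string) before they can be stored.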

The Async classes are used by

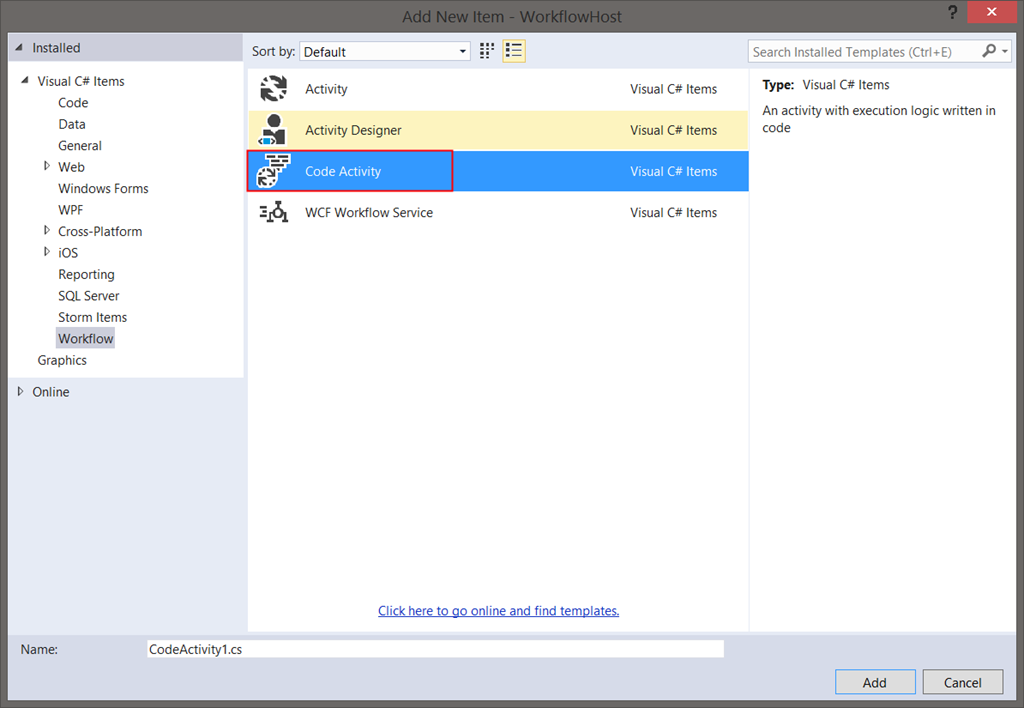

UnstructuredStorageInstanceStoreclass which derives fromInstanceStoreclass.UnstructuredStorageInstanceStoreimplements Azure Table Storage based Instance Store for the workflow host.Routines: Contains helper functions for the project.SynchronousSynchronizationContext: Makes the workflows running on workflow host work synchronously.TypeSwitch: A utility used byAzureTableStorageRepositoryto convert .net data types to Entity Data Model types and vice versa.The Workflow folder contains a simple workflow with two code activities. To create a code activity, select Add > New Item… > Workflow > Code Activity. Note that to support bookmarks, your code activity should derive from

NativeActivity.

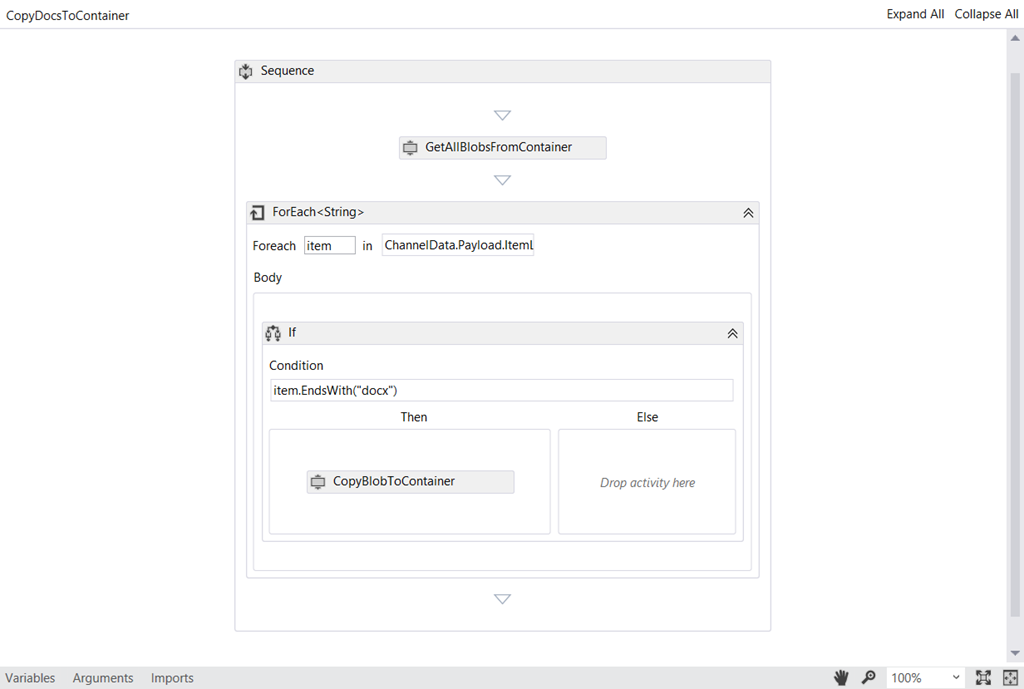

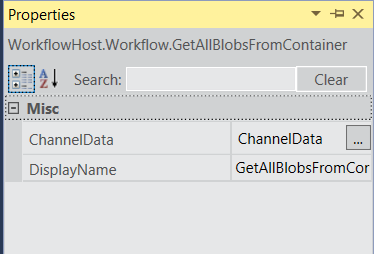

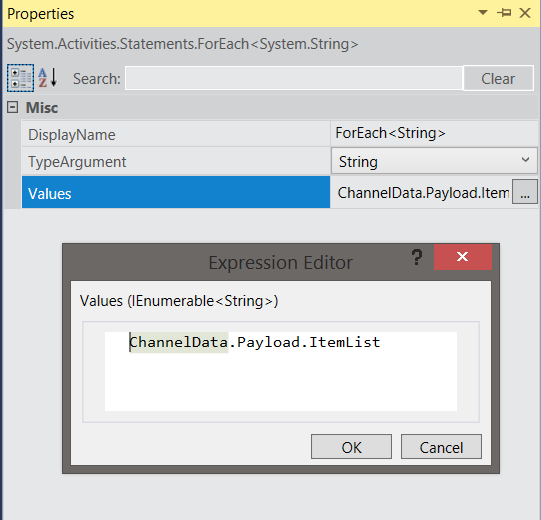

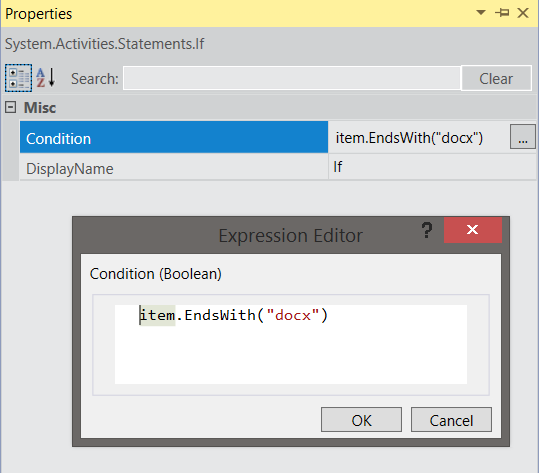

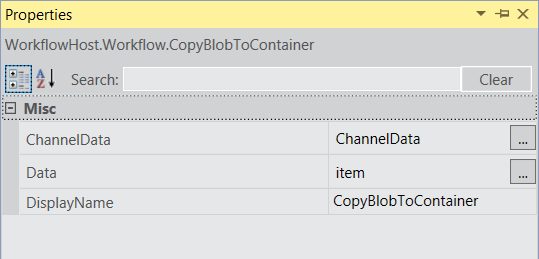

- GetAllBlobsFromContainer: This activity accepts `ChannelData` as an input and output argument. The workflow host passes this data as an argument to the workflow that contains this activity, and the workflow in turn passes it to the activity. The code inside this activity is self-explanatory. It gets the list of block blobs in the source container and adds it to the `ItemList` property of the argument it received as input. Finally, it bookmarks its state. When the bookmark is resumed, the workflow does not execute this activity again; it continues with the subsequent activities without losing state.

- CopyBlobToContainer: This activity accepts `ChannelData` and a single string representing the name of a blob as input. The rest of the code copies that blob from the source container to the target container.

- CopyDocsToContainer: This activity is composed of the code activities above. To create it, select Add > New Item… > Workflow > Activity. Drag a Sequence from the Toolbox and drop it on the designer. Next, add the `GetAllBlobsFromContainer` activity, followed by a `ForEach` activity that goes through the list of blobs returned by `GetAllBlobsFromContainer`. Inside the `ForEach`, drop an `If` activity that checks whether the blob name ends with the “docx” extension, and drag the `CopyBlobToContainer` activity into its “Then” box. The end result should look like the following.

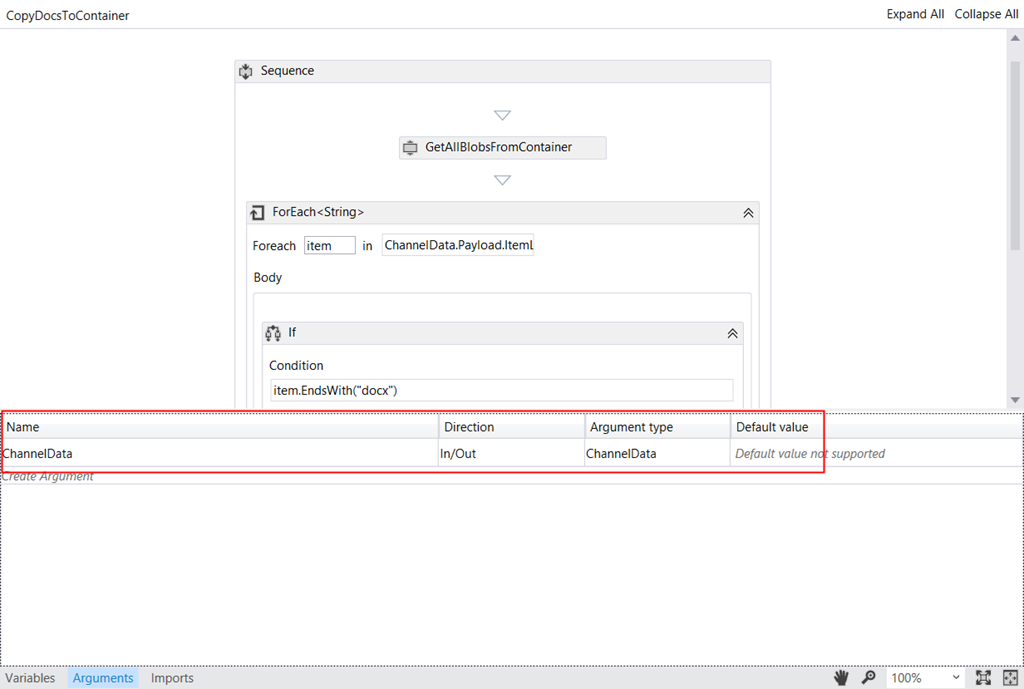

- Next, go to the Arguments tab and add `ChannelData` as an argument to the workflow.

Assign values to the properties of each of the following activities:

- GetAllBlobsFromContainer

- ForEach activity

- If activity

- CopyBlobToContainer
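The `ForEach` and `If` settings are entered in the designer, so their exact expressions are not shown here. The following C# sketch shows roughly what that pair does at run time: iterate over `ChannelData.Payload.ItemList` and hand each Word document to the copy step. The `DocxSelection` class is hypothetical. Its check for a `.docx` suffix is case-insensitive, which may be stricter or looser than the condition in the sample's `If` activity.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class DocxSelection
{
    // Equivalent of the If condition: does the blob name end with ".docx"?
    public static bool IsWordDocument(string blobName) =>
        blobName != null && blobName.EndsWith(".docx", StringComparison.OrdinalIgnoreCase);

    // Equivalent of ForEach over the item list, with copyBlob standing in for CopyBlobToContainer.
    public static IReadOnlyList<string> CopyDocs(IEnumerable<string> itemList, Action<string> copyBlob)
    {
        var copied = new List<string>();
        foreach (var name in itemList)
        {
            if (!IsWordDocument(name))
            {
                continue; // the "Else" branch is empty: skip non-documents
            }

            copyBlob(name);
            copied.Add(name);
        }

        return copied;
    }
}
```

A name such as `notes.docx.bak` is skipped because only the final extension is checked.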

To prove that you can load workflows at runtime, I took the XAML of the `CopyDocsToContainer` activity and pasted it as text into the CopyDocsToContainer.txt file. This means that as long as the workflow host has the binaries of the custom code activities, it does not depend on which workflows run on it.

The `TestApplication` project is a console application that sends a single message to the queue our worker role listens on.

Now, let’s build the workflow host. Open the WorkerRole.cs file and read on.

Once a message is received from the queue, the workflow XAML is read from the text file (ideally, it would come from a database). The following code creates a new instance of `WorkflowApplication`. Note that we also pass the `ChannelData` object as an argument to the workflow.

```csharp
var workflowApplication =
    new WorkflowApplication(
        Routines.CreateWorkflowActivityFromXaml(workflowXaml, this.GetType().Assembly),
        new Dictionary<string, object> { { "ChannelData", channelData } });
```
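The snippet above reads the XAML from a text file, and the post suggests a database would be the better long-term home. One way to keep that choice swappable is to hide the lookup behind a small interface keyed by workflow name. The bookmark message already carries a `workflowName` property. `IWorkflowDefinitionSource` and both implementations below are hypothetical and do not appear in the sample. The in-memory version stands in for a database-backed store.

```csharp
using System;
using System.Collections.Generic;
using System.IO;

// Hypothetical abstraction: where the host fetches the XAML for a given workflow name.
public interface IWorkflowDefinitionSource
{
    string GetXaml(string workflowName);
}

// Reads "<workflowName>.txt" from a folder, like the sample's CopyDocsToContainer.txt.
public sealed class FileWorkflowDefinitionSource : IWorkflowDefinitionSource
{
    private readonly string folder;

    public FileWorkflowDefinitionSource(string folder) => this.folder = folder;

    public string GetXaml(string workflowName)
    {
        // Reject names that could escape the folder or are not valid file names.
        if (string.IsNullOrWhiteSpace(workflowName) ||
            workflowName.IndexOfAny(Path.GetInvalidFileNameChars()) >= 0)
        {
            throw new ArgumentException("Invalid workflow name.", nameof(workflowName));
        }

        return File.ReadAllText(Path.Combine(folder, workflowName + ".txt"));
    }
}

// Stand-in for a database-backed store.
public sealed class InMemoryWorkflowDefinitionSource : IWorkflowDefinitionSource
{
    private readonly Dictionary<string, string> definitions;

    public InMemoryWorkflowDefinitionSource(Dictionary<string, string> definitions) =>
        this.definitions = definitions;

    public string GetXaml(string workflowName) =>
        definitions.TryGetValue(workflowName, out var xaml)
            ? xaml
            : throw new KeyNotFoundException($"No workflow named '{workflowName}'.");
}
```

The worker role would then pass the returned string to `Routines.CreateWorkflowActivityFromXaml` as before.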

- Next, we apply various settings to the workflow application, mainly setting up the instance store and attaching handlers to its events. The most important handler is the one for the `PersistableIdle` event, which is raised when the workflow becomes idle and can be persisted, for example after an activity creates a bookmark. At this point we need to unload the workflow and add a message, carrying the updated `ChannelData` object, to the queue the workflow host is listening on. When the bookmark is resumed, we can then pass the updated information back to the workflow.

```csharp
//// Set up the workflow execution environment.
//// 1. Make the workflow synchronous.
workflowApplication.SynchronizationContext = new SynchronousSynchronizationContext();

//// 2. Initialize the instance store with the instance identifier.
this.repository = new AzureTableStorageRepository<InstanceData>(
    "instanceStore",
    CloudConfigurationManager.GetSetting("WorkflowStorage"));
this.repository.CreateStorageObjectAndSetExecutionContext();
var instanceStore = new UnstructuredStorageInstanceStore(
    this.repository,
    workflowId,
    this.AddBookmarkMessage);

//// 3. Assign this instance store to the WorkflowApplication.
workflowApplication.InstanceStore = instanceStore;

//// 4. Handle PersistableIdle to remove the application from memory.
//// At this point we also need to add a message to the host queue signaling that a bookmark has been created.
workflowApplication.PersistableIdle = persistableIdleEventArgument =>
{
    //// Check whether the application is unloading because of bookmarks.
    if (persistableIdleEventArgument.Bookmarks.Any())
    {
        Trace.Write(
            Routines.FormatStringInvariantCulture(
                "Application Instance {0} is going to save state for bookmark {1}",
                persistableIdleEventArgument.InstanceId,
                persistableIdleEventArgument.Bookmarks.Last().BookmarkName));
    }

    return PersistableIdleAction.Unload;
};
```
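Step 1 assigns the `SynchronousSynchronizationContext` from the Utilities folder. `WorkflowApplication` schedules the workflow's work through the `SynchronizationContext` assigned to it. The base `SynchronizationContext.Post` queues callbacks to the thread pool, and a context that runs them inline keeps execution on the worker role's thread. The sketch below assumes the utility follows this common pattern. The sample's own class may differ in detail.

```csharp
using System;
using System.Threading;

// Sketch: a SynchronizationContext that executes posted callbacks inline.
public sealed class SynchronousSynchronizationContext : SynchronizationContext
{
    // The base implementation queues Post callbacks to the thread pool;
    // invoking the callback directly keeps the work on the calling thread.
    public override void Post(SendOrPostCallback d, object state) => d(state);

    // The base CreateCopy returns a plain SynchronizationContext, which would
    // lose the inline behavior, so hand out another synchronous context instead.
    public override SynchronizationContext CreateCopy() => new SynchronousSynchronizationContext();
}
```

Running inline makes the host's control flow easier to follow in a worker role that processes one message at a time. The trade-off is that the calling thread is blocked until the workflow idles or completes.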

- The `AddBookmarkMessage` method is responsible for adding the bookmark message to the queue. Note that `WorkflowIdentifier` (necessary to identify the workflow instance) remains the same. An additional flag, `IsBookmark`, is used to differentiate between a new workflow and a bookmarked one.

```csharp
private void AddBookmarkMessage(Guid workflowId)
{
    var hostQueueMessage = new HostQueueMessage
    {
        IsBookmark = true,
        WorkflowIdentifier = workflowId,
        PersistentPayload = this.arrivedMessage.PersistentPayload,
        ItemList = this.arrivedMessage.ItemList
    };

    var message = new BrokeredMessage(hostQueueMessage);
    message.Properties.Add("workflowName", "CopyDocsToContainer");
    this.Client.Send(message);
}
```
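On the receiving side, `IsBookmark` decides which path the host takes: start a new instance or load and resume an existing one. In the sample this branching happens inline in the worker role's message loop. The `WorkflowDispatcher` below is a hypothetical, framework-free sketch of that decision, with the start and resume paths passed in as delegates.

```csharp
using System;

// Only the two fields the routing decision needs; the real message carries more.
public sealed class HostQueueMessage
{
    public bool IsBookmark { get; set; }
    public Guid WorkflowIdentifier { get; set; }
}

// Hypothetical dispatcher; the sample's WorkerRole does this inline.
public sealed class WorkflowDispatcher
{
    private readonly Action<Guid> startNew;
    private readonly Action<Guid> resumeBookmark;

    public WorkflowDispatcher(Action<Guid> startNew, Action<Guid> resumeBookmark)
    {
        this.startNew = startNew ?? throw new ArgumentNullException(nameof(startNew));
        this.resumeBookmark = resumeBookmark ?? throw new ArgumentNullException(nameof(resumeBookmark));
    }

    public void Dispatch(HostQueueMessage message)
    {
        if (message == null)
        {
            throw new ArgumentNullException(nameof(message));
        }

        // Both message kinds carry the same WorkflowIdentifier; only the flag differs.
        if (message.IsBookmark)
        {
            resumeBookmark(message.WorkflowIdentifier);
        }
        else
        {
            startNew(message.WorkflowIdentifier);
        }
    }
}
```

Keeping the identifier stable is what allows the resume path to find the persisted instance in the instance store.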

- If the workflow is bookmarked, we compose the workflow the same way we do for a new one, but we use the `ResumeBookmark` function to resume its execution.

```csharp
//// Prepare a new workflow instance as we need to resume the bookmark.
var bookmarkedWorkflowApplication =
    new WorkflowApplication(
        Routines.CreateWorkflowActivityFromXaml(
            workflowXaml,
            this.GetType().Assembly));

this.SetupWorkflowEnvironment(
    bookmarkedWorkflowApplication,
    channelData.Payload.WorkflowIdentifier);

//// 9. Resume the bookmark and supply input as is from channel data.
bookmarkedWorkflowApplication.Load(channelData.Payload.WorkflowIdentifier);

//// 9.1. If the workflow completed successfully, remove the host message.
if (BookmarkResumptionResult.Success
    == bookmarkedWorkflowApplication.ResumeBookmark(
        bookmarkedWorkflowApplication.GetBookmarks().Single().BookmarkName,
        channelData,
        TimeSpan.FromDays(7)))
{
    Trace.Write(
        Routines.FormatStringInvariantCulture("Bookmark successfully resumed."));
    this.resetEvent.WaitOne();
    this.Client.Complete(receivedMessage.LockToken);
    return;
}
```
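The ordering in that last block matters for reliability. The host waits on `resetEvent` until the workflow signals completion, and only then calls `Client.Complete`. If the host dies before completing, the peek-locked message becomes visible again when its lock expires and is retried. The sketch below captures that ordering without Service Bus or WF. `CompletionGate` is hypothetical and swaps a `TaskCompletionSource` in for the reset event. Unlike the sample's untimed `WaitOne()`, it adds a timeout.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical helper mirroring "wait for completion, then acknowledge the message".
public static class CompletionGate
{
    public static bool RunAndAcknowledge(Action<Action> startWorkflow, Action acknowledge, TimeSpan timeout)
    {
        if (startWorkflow == null) throw new ArgumentNullException(nameof(startWorkflow));
        if (acknowledge == null) throw new ArgumentNullException(nameof(acknowledge));

        // Plays the role of the sample's resetEvent: the workflow's completion callback signals it.
        var completed = new TaskCompletionSource<bool>();
        startWorkflow(() => completed.TrySetResult(true));

        // No signal in time: do not acknowledge, so the message can be redelivered later.
        if (!completed.Task.Wait(timeout))
        {
            return false;
        }

        acknowledge(); // corresponds to Client.Complete(lockToken) in the worker role
        return true;
    }
}
```

Acknowledging only after completion gives at-least-once processing, so the activities should tolerate running again for the same instance.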

That’s it. We have everything we need. Just supply the necessary connection strings in the configuration files of the TestApplication and WorkflowOnAzure cloud service projects, and put some files in the source container.

Output

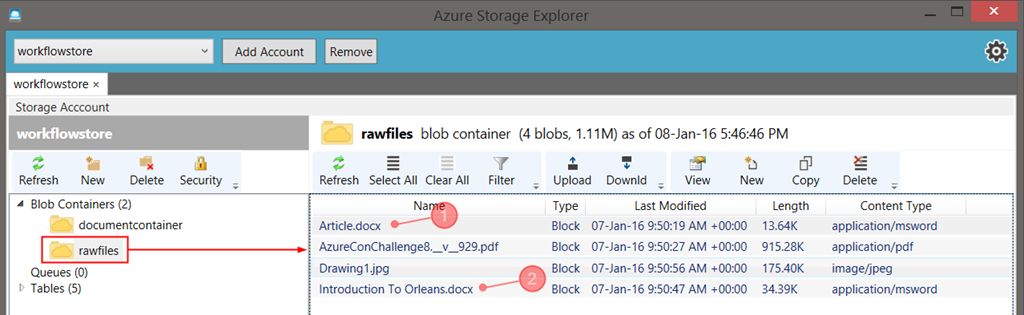

I put a few files in my source container, including some document files.

I ran the workflow application and…

Cool, isn’t it? I hope you get to use it in your projects. Code well! See you soon…er :-)
