I recently migrated my blog to a brand-new platform. My main reasons for the upgrade were to remove my dependency on Windows Live Writer for authoring content and to trim down the large number of Azure services I was using to keep the blog ticking. I also wanted to improve response times and keep my focus on writing posts rather than maintaining the code base. Hence the switch to static website generators…

What Are Static Website Generators?

Simply put, static website generators take your content, which is typically stored in flat files rather than databases, apply it to layouts or templates, and generate a structure of purely static HTML files that are ready to be delivered to users. Since no server-side script runs on the host, the server does no processing beyond returning files in response to requests. Several large companies, such as Google (Year in Search), Nest and MailChimp, use static website generators to deliver content. There are currently more than 100 static website generators to choose from. The most notable among them is Jekyll, the generator that powers the US healthcare website Healthcare.gov and the Obama presidential campaign website.
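To make the content-plus-template idea concrete, here is a toy generator in Python. It is a minimal sketch, not how Hugo or Jekyll work internally. It assumes a hypothetical content format (a simple "key: value" front-matter block between two "---" lines, followed by the body), treats blank-line-separated text as paragraphs instead of parsing full Markdown, and skips entries marked as drafts, much as Hugo does by default.

```python
"""Toy static site generator: flat-file content + template -> static HTML.

Illustrative sketch only. The front-matter format below is an assumption,
and the body is split into paragraphs rather than parsed as Markdown.
"""
import html
from pathlib import Path
from string import Template
from typing import Dict, List, Tuple

PAGE_TEMPLATE = Template(
    "<!DOCTYPE html>\n<html><head><title>$title</title></head>\n"
    "<body><h1>$title</h1>\n$body\n</body></html>\n"
)


def parse_entry(text: str) -> Tuple[Dict[str, str], str]:
    """Split '---\\nkey: value\\n---\\nbody' into (front matter, body)."""
    _, header, body = text.split("---\n", 2)
    meta = {}
    for line in header.strip().splitlines():
        key, _, value = line.partition(":")
        meta[key.strip()] = value.strip()
    return meta, body.strip()


def render(meta: Dict[str, str], body: str) -> str:
    # Wrap each blank-line-separated paragraph in <p>, escaping HTML characters.
    paragraphs = "\n".join(
        f"<p>{html.escape(p)}</p>" for p in body.split("\n\n") if p.strip()
    )
    title = html.escape(meta.get("title", "Untitled"))
    return PAGE_TEMPLATE.substitute(title=title, body=paragraphs)


def build_site(content_dir: Path, output_dir: Path) -> List[Path]:
    """Render every .md file under content_dir to an .html file under output_dir."""
    written = []
    for source in sorted(content_dir.rglob("*.md")):
        meta, body = parse_entry(source.read_text(encoding="utf-8"))
        if meta.get("draft", "false").lower() == "true":
            continue  # skip drafts, similar to Hugo's default behaviour
        target = output_dir / source.relative_to(content_dir).with_suffix(".html")
        target.parent.mkdir(parents=True, exist_ok=True)
        target.write_text(render(meta, body), encoding="utf-8")
        written.append(target)
    return written
```

All the work happens once, at build time; the web server only hands out the files written to the output folder.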

Of course, the static website approach has drawbacks. The biggest one is that the content cannot interact with users. Any personalization and interactivity has to run on the client side, which is more limited. There is also no admin interface for authoring content, like the ones available in most modern CMSs such as Umbraco. However, this blog, like most websites on the internet, serves only to display information to the user and therefore does not need server-side code. I prefer to stay within the realm of Visual Studio as much as possible, so the lack of an admin interface is not a showstopper for me.

This Blog Runs on Hugo

There are several generators to choose from, and most bloggers stick with Jekyll or Middleman for good reasons. I chose Hugo primarily because it generates websites at lightning speed and because it is incredibly simple to set up. Unlike Ruby- and Node.js-based generators, which require you to set up a development environment, Hugo only requires you to copy its binaries to a location. To update Hugo, you just copy the new binaries to the same location. Hugo's site generation speed is unparalleled. Generation speed matters during development, when you regenerate the site many times, and when staging the site before pushing the content to production. Your content will only grow over time, and with some foresight you can avoid sitting idle, twiddling your thumbs while you wait for your site to be generated before your content goes live.

The biggest drawback of Hugo is that you can't hook into its asset pipeline to include tools such as Grunt or Gulp. All you have is a static folder containing the scripts and stylesheets that your website uses. To use Sass, newer ES features and the like, you can create a build script that runs those tools and includes Hugo as one step of the build process.
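A build script of that kind can be as small as a list of commands run in order. The sketch below assumes the Dart Sass CLI is installed as sass and that the stylesheet lives at the hypothetical path assets/scss/main.scss; swap in whatever tools and paths your project actually uses. The runner parameter exists only so the steps can be exercised without the tools installed.

```python
"""Sketch of a build script that runs asset tools before Hugo.

Assumptions (not from the original post): Dart Sass is installed as `sass`,
and the source stylesheet path below is hypothetical.
"""
import subprocess
from typing import Callable, List, Optional, Sequence

BUILD_STEPS: List[List[str]] = [
    # Compile Sass into the static folder, which Hugo copies to the output as-is.
    ["sass", "assets/scss/main.scss", "static/css/main.css"],
    # Generate the site; by default Hugo writes the output to ./public.
    ["hugo"],
]


def _run_command(cmd: Sequence[str]) -> None:
    # check=True raises CalledProcessError if the tool exits with a non-zero code.
    subprocess.run(list(cmd), check=True)


def run_build(steps: Sequence[Sequence[str]] = BUILD_STEPS,
              runner: Optional[Callable[[Sequence[str]], None]] = None) -> None:
    """Run each build step in order; an exception from any step stops the build."""
    run = runner or _run_command
    for cmd in steps:
        print("running:", " ".join(cmd))
        run(cmd)
```

Calling run_build() from the CI job compiles the assets first, so Hugo always publishes up-to-date CSS.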

That said, Hugo has no dependencies and, in my opinion, the best content model. You put content in a predefined folder structure, and Hugo parses it into sections and entries within those sections. Features such as live reload cut down development time and are a joy to work with.
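In Hugo, each top-level directory under content/ becomes a section, and the files inside it become that section's entries. The sketch below models just that rule; it deliberately ignores nested sections, _index.md list pages and page bundles, and the file names in the usage example are hypothetical.

```python
"""Simplified model of how a content folder maps to sections and entries."""
from collections import defaultdict
from pathlib import PurePosixPath
from typing import Dict, Iterable, List


def group_into_sections(paths: Iterable[str]) -> Dict[str, List[str]]:
    """Map section name -> sorted entry slugs, for paths relative to content/."""
    sections: Dict[str, List[str]] = defaultdict(list)
    for raw in paths:
        path = PurePosixPath(raw)
        # Only Markdown entries count; _index.md describes the section itself.
        if path.suffix != ".md" or path.name == "_index.md":
            continue
        # Files directly under content/ belong to no section; key them as "".
        section = path.parts[0] if len(path.parts) > 1 else ""
        sections[section].append(path.stem)
    return {name: sorted(entries) for name, entries in sections.items()}
```

For example, ["posts/hugo-migration.md", "posts/azure-cdn.md", "about.md"] yields a "posts" section with two entries plus a top-level "about" page.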

The New Architecture

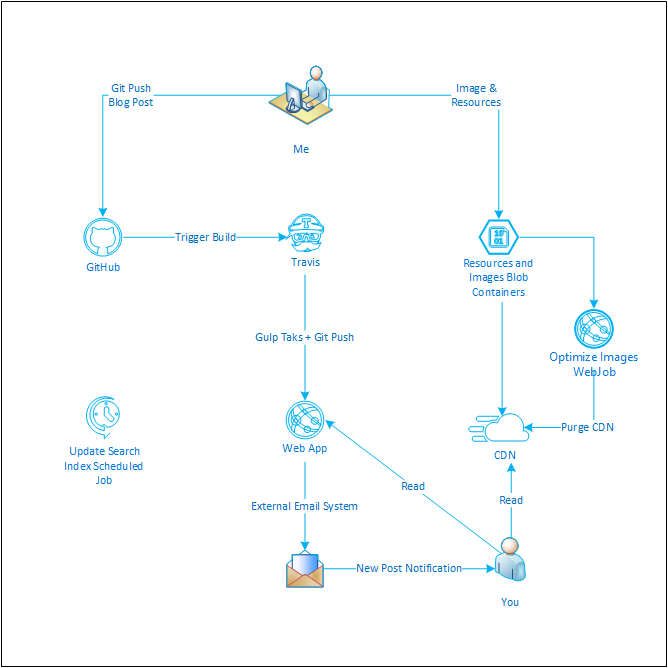

After the revamp, the new architecture is as follows:

You will notice that I use far fewer components and that I have added Travis CI and Azure CDN to the delivery pipeline.

Travis CI is a hosted, third-party continuous integration (CI) and continuous delivery (CD) service that is free for open-source GitHub projects. It is highly customizable: you can hook into the build pipeline by supplying scripts to run during the various stages of the build process.

Once Travis CI has been activated for a repository, GitHub notifies it whenever new commits are pushed to that repository or a pull request is submitted. Travis can also be configured to run only for specific branches, or for branches whose names match a pattern. Travis CI then checks out the relevant branch and runs the commands specified in .travis.yml, which usually build the software and run any automated tests. When that process has completed, Travis notifies the developers in whichever way it has been configured to, for example by sending an email containing the test results.
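Branch filtering is configured with the branches key in .travis.yml, using an only list (build just these) or an except list (build everything but these). An entry wrapped in slashes, such as /^release-.*$/, is treated as a regular expression, and if both lists are given, only wins. The Python sketch below illustrates that rule; it is not Travis CI's own code, and Python's regex dialect differs slightly from the Ruby one Travis uses.

```python
"""Illustration of the branch-filter rule behind `branches: only/except`."""
import re
from typing import Optional, Sequence


def _matches(branch: str, pattern: str) -> bool:
    # /.../ means "regular expression"; anything else must match exactly.
    if len(pattern) > 1 and pattern.startswith("/") and pattern.endswith("/"):
        return re.search(pattern[1:-1], branch) is not None
    return branch == pattern


def should_build(branch: str,
                 only: Optional[Sequence[str]] = None,
                 exclude: Optional[Sequence[str]] = None) -> bool:
    """Return True if a push to `branch` should trigger a build."""
    if only:
        # When an allow-list is present, the exclude list is ignored.
        return any(_matches(branch, p) for p in only)
    if exclude:
        return not any(_matches(branch, p) for p in exclude)
    return True
```

With only=["master", "/^release-.*$/"], pushes to master and release-1.2 build while feature branches are skipped.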

The new design uses several Azure components, such as WebApps, Scheduled Jobs, WebJobs and Azure CDN, plus a couple of external dependencies. You are probably already familiar with these Azure services (if not, subscribe). Next, let's look at how the system works.

The Process

I write my blog posts in Markdown and save the supporting images in a local folder that is mapped to OneDrive. I then sync the images to Azure Blob Storage using CloudBerry. An Azure CDN instance picks up the images and other resources from blob storage and pushes them to the CDN PoPs (points of presence) (see how). A WebJob detects that images have been uploaded to the storage account (see how). The WebJob then downloads those images, optimizes them, pushes them back to storage and purges the appropriate CDN endpoint so that the optimized content becomes available for delivery through the CDN.
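The WebJob's flow (find new images, optimize, write back, purge the CDN) can be sketched independently of Azure. In this sketch, BlobStore and Cdn are hypothetical interfaces, not Azure SDK APIs; in a real WebJob they would wrap Blob Storage and the CDN purge operation, and optimize stands in for whatever image optimizer you plug in. The in-memory classes exist only to exercise the flow.

```python
"""Sketch of the image-optimization WebJob flow with hypothetical interfaces."""
from typing import Callable, Dict, List, Protocol, Set


class BlobStore(Protocol):
    def list_names(self) -> List[str]: ...
    def download(self, name: str) -> bytes: ...
    def upload(self, name: str, data: bytes) -> None: ...


class Cdn(Protocol):
    def purge(self, paths: List[str]) -> None: ...


def process_new_images(store: BlobStore, cdn: Cdn,
                       optimize: Callable[[bytes], bytes],
                       processed: Set[str]) -> List[str]:
    """Optimize images not seen before, write them back, then purge the CDN."""
    new_names = [n for n in store.list_names() if n not in processed]
    for name in new_names:
        original = store.download(name)
        store.upload(name, optimize(original))  # overwrite with optimized bytes
        # Remember the name so the re-upload does not trigger another pass.
        processed.add(name)
    if new_names:
        # One purge call so the CDN fetches the optimized versions next time.
        cdn.purge(["/" + n for n in new_names])
    return new_names


class MemoryStore:
    """In-memory stand-in for blob storage."""

    def __init__(self, blobs: Dict[str, bytes]) -> None:
        self.blobs = dict(blobs)

    def list_names(self) -> List[str]:
        return sorted(self.blobs)

    def download(self, name: str) -> bytes:
        return self.blobs[name]

    def upload(self, name: str, data: bytes) -> None:
        self.blobs[name] = data


class RecordingCdn:
    """Stand-in CDN that records purge requests."""

    def __init__(self) -> None:
        self.purged: List[List[str]] = []

    def purge(self, paths: List[str]) -> None:
        self.purged.append(list(paths))
```

Tracking processed names matters because writing the optimized image back is itself an upload; without it, the job could keep re-optimizing its own output.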

Next, I push my blog post to GitHub, which notifies Travis of the changes. I use a custom script to git push only the new or updated files to my Azure WebApp. This saves me the pain of deploying everything to my WebApp on every single push. Also, by default Hugo does not build posts that are marked as drafts, so I can push in-progress posts to GitHub without affecting the existing deployment. Later, when I am ready, I just set the post's draft property to false to make it public.
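One way to work out which generated files are new or updated is to compare content hashes against a manifest saved after the previous deployment. This is a minimal sketch, not my actual script; the manifest format (a JSON map of relative path to SHA-256 digest) is an assumption for illustration.

```python
"""Sketch: find generated files that are new or changed since the last deploy."""
import hashlib
import json
from pathlib import Path
from typing import Dict, List, Tuple


def hash_tree(root: Path) -> Dict[str, str]:
    """Return {relative path: SHA-256 hex digest} for every file under root."""
    return {
        p.relative_to(root).as_posix(): hashlib.sha256(p.read_bytes()).hexdigest()
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def changed_files(root: Path, manifest_path: Path) -> Tuple[List[str], Dict[str, str]]:
    """Return (new or modified paths, current manifest)."""
    previous = json.loads(manifest_path.read_text()) if manifest_path.exists() else {}
    current = hash_tree(root)
    changed = [path for path, digest in current.items() if previous.get(path) != digest]
    return changed, current


def save_manifest(manifest_path: Path, manifest: Dict[str, str]) -> None:
    # Save only after a successful deploy, so a failed push is retried next time.
    manifest_path.write_text(json.dumps(manifest, indent=2, sort_keys=True))
```

Keep the manifest outside the generated folder so it is not hashed itself. This sketch does not detect deleted files; comparing the old manifest's keys with the new one would cover that.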

I have integrated a third-party mailing service with the blog that detects changes in the blog's RSS feed and sends an email to my subscribers. I took great pains to make sure that my subscribers' data stays safe. I ❤️ my subscribers, and therefore I pay 💰 MailerLite to keep my subscriber list safe and my subscribers happy.
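The core of RSS-triggered email is spotting feed items that have not been seen before. The sketch below shows the general idea using Python's standard XML parser on an RSS 2.0 feed; it is an illustration only and makes no claim about how MailerLite implements its RSS campaigns.

```python
"""Sketch of RSS change detection: report items whose GUIDs are new."""
import xml.etree.ElementTree as ET
from typing import List, Set, Tuple


def new_items(feed_xml: str, seen: Set[str]) -> List[Tuple[str, str]]:
    """Return (title, link) for unseen <item>s in an RSS 2.0 feed and mark them seen."""
    root = ET.fromstring(feed_xml)
    fresh = []
    for item in root.iter("item"):
        # Prefer <guid> as the identity; fall back to <link> if it is missing.
        key = item.findtext("guid") or item.findtext("link")
        if key and key not in seen:
            seen.add(key)
            fresh.append((item.findtext("title", default=""),
                          item.findtext("link", default="")))
    return fresh
```

A mailing service would poll the feed on a schedule, call something like this, and send one email per new item (or a digest).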

The Code

As always, the code is available for you to use in your projects or blogs for FREE. Do send me a note of appreciation if it helps you.

Improving Efficiency

Several factors make this blog efficient at delivery:

- No server-side scripts.

- Optimization of all images to reduce delivery payload.

- Integration of Gulp into the build pipeline to compress the generated HTML and scripts.

- Delivery of resources through Azure CDN.

- Keeping the WebApp loaded at all times through the Always On setting.

- Replacing Google Analytics (heavy script) with Heap Analytics (light and more fun).
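To give a feel for what an HTML-compression step buys, here is a deliberately naive minifier that drops comments and collapses whitespace, plus a size comparison. It is a sketch only, not what the Gulp plugins do: a real minifier is much more careful, and this version would break pre, textarea and inline scripts that depend on whitespace.

```python
"""Toy illustration of HTML minification and its effect on payload size."""
import gzip
import re
from typing import Dict

_COMMENT = re.compile(r"<!--.*?-->", re.DOTALL)
_WHITESPACE = re.compile(r"\s+")


def naive_minify(html: str) -> str:
    """Remove HTML comments and collapse every whitespace run to one space."""
    without_comments = _COMMENT.sub("", html)
    return _WHITESPACE.sub(" ", without_comments).strip()


def payload_sizes(html: str) -> Dict[str, int]:
    """Byte counts for the raw page, the minified page and minified + gzip."""
    minified = naive_minify(html).encode("utf-8")
    return {
        "raw": len(html.encode("utf-8")),
        "minified": len(minified),
        "minified_gzip": len(gzip.compress(minified)),
    }
```

For tiny pages gzip can even add bytes; the savings show up on real pages with lots of repeated markup.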

Conclusion

This blog survives on feedback. People report issues to me all the time, and that’s what makes it better. Do subscribe and let me know your opinion.

Even more important to me is that you set up your own blog and curate it. If you don't want the hassle of hosting your own blogging platform, start with one of the many free hosted platforms and post whatever comes to mind. Not only will it keep you happy, it will also give you a brand of your own. Take care!
