In this series

This is the final article in this series on building MR IoT applications using the MSF Process Model. If you have landed on this post directly, I recommend that you go through the previous posts first.

- Part 1: An overview of the MSF and the Envisioning and Planning phases of delivering the solution.

- Part 2: The development and deployment of the backend of the solution.

Having gone through the delivery steps in this series, you will have seen that the objective of MSF is not to prescribe a delivery methodology but to provide a framework that is flexible, scalable, and technology-agnostic. In the previous post, we provisioned the backend systems for the IoT project. We also created a simulated device and a WebAPI that surfaces the data generated by the simulated device.

In this article, we will build and deploy the MR application on an actual device while discussing the phases of MSF in parallel. By the end of this article, you will have the confidence and knowledge to deliver a greenfield MR and IoT engagement starting from inception to deployment.

Mixed Reality Project Setup

To create the Mixed Reality data visualization application, follow these steps.

- Start a new instance of Unity 3D.

- Create a new 3D project by providing a project name, folder location, and other details.

- Click on Create Project.

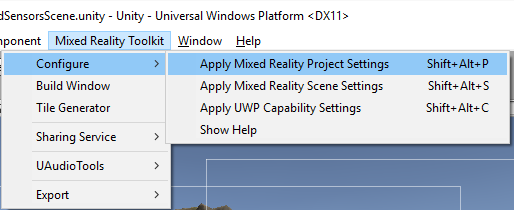

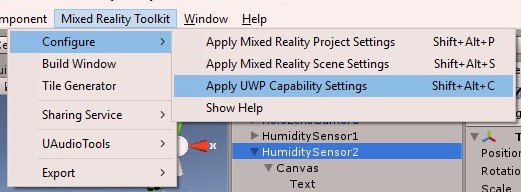

Once you have created a blank project, you need to import the Mixed Reality Toolkit package into Unity. You can follow the steps mentioned here to bring the package into your Unity editor. After adding the toolkit, you will see the Mixed Reality Toolkit option in the main menu of the editor. To prepare the default scene to load correctly in HoloLens, select the option Mixed Reality Toolkit > Configure > Apply Mixed Reality Project Settings.

Preparing the Scene

Remove the main camera from the scene and add the HoloLens camera prefab named HoloLensCamera, which is located in the Assets folder at HoloToolkit\Input\Prefabs.

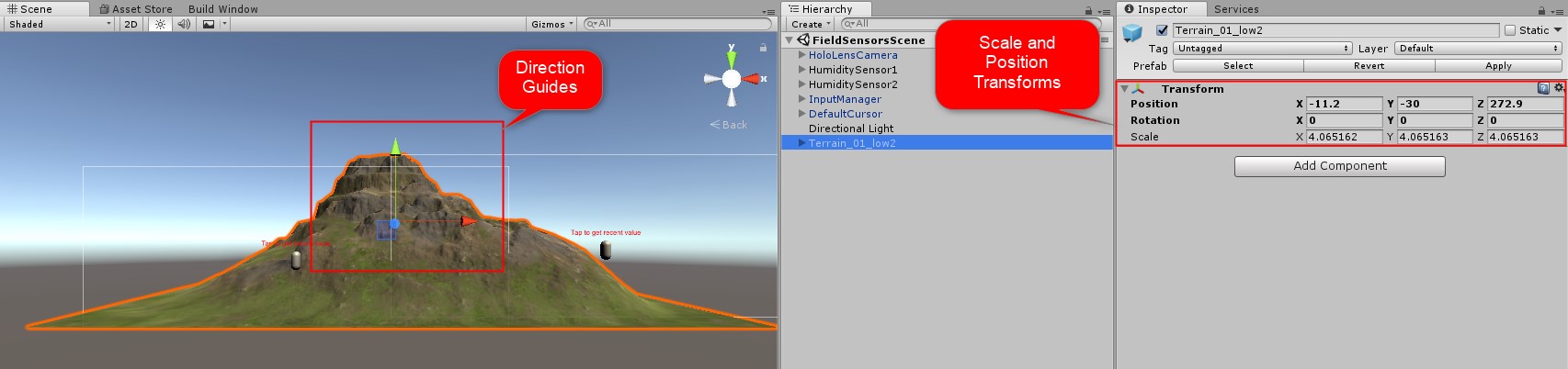

After you have added the camera, search the Unity Asset Store for a landscape asset, or import a landscape asset that you previously created into the scene. Here are the steps to download any asset from the Unity Asset Store and make it available to your application. After the import, you might need to change the scale and the position of your asset to bring it into your field of view. Use the arrow guides and the scale settings to adjust the position of your asset.

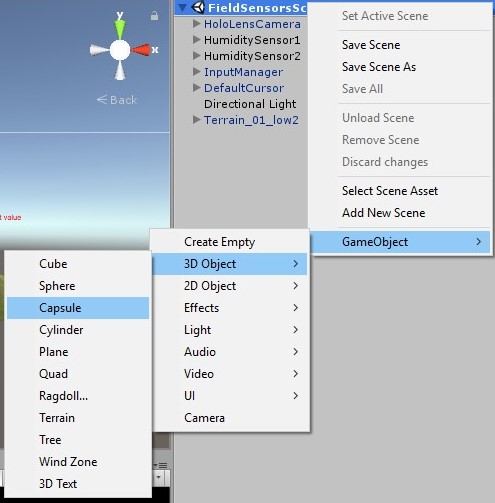

You will need to add a couple more assets to the scene to complete the setup. Add two 3D Capsule game objects to the scene by right-clicking the scene and selecting GameObject > 3D Object > Capsule.

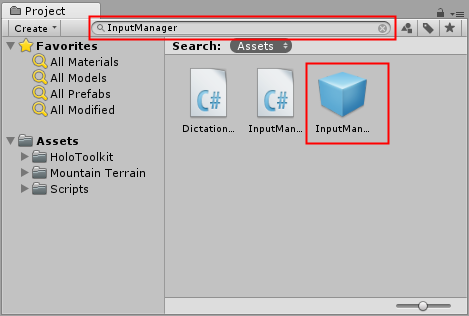

Rename the capsule objects to HumiditySensor1 and HumiditySensor2 respectively. Next, to show a cursor and let the user select an object by air-tapping it, add the InputManager prefab and the DefaultCursor prefab to the scene. These assets are located inside the HoloToolkit folder, and you can use the search control to find them quickly.

After you are done adding the assets, save the scene by navigating to File > Save Scene and providing a name for the scene. I named mine FieldSensorScene.

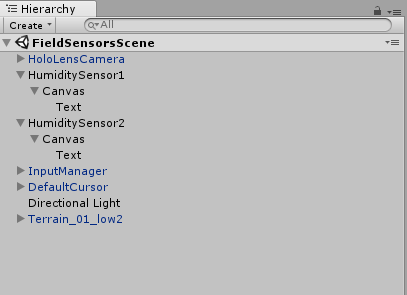

The complete object hierarchy after you have added all the assets will look like the following.

Adding Colliders

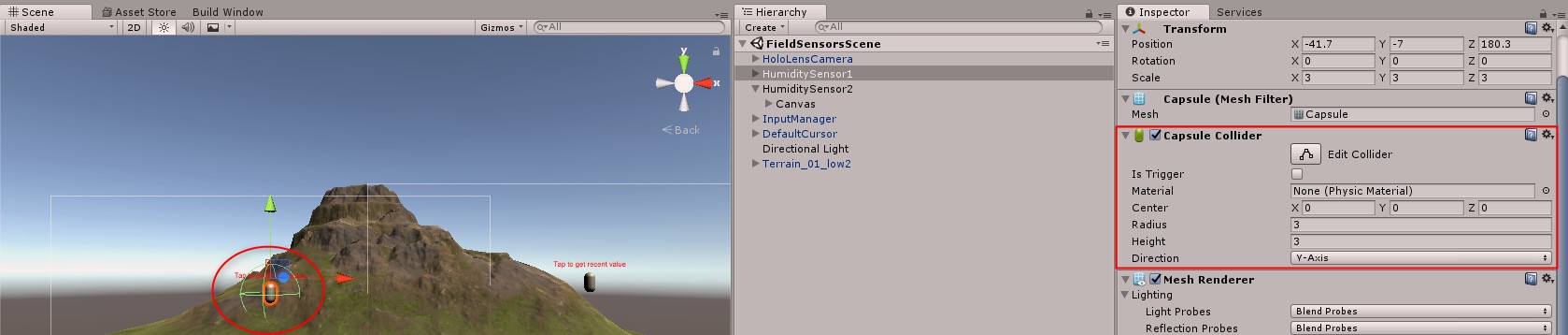

For gaze to work, each object that the user can gaze at must have a collider. Unity provides several primitive colliders, such as the Box Collider, which define the shape of an object for collision and ray-casting purposes. In our sample, we will attach a Capsule Collider to the sensor assets that we added to the scene. Repeat the following steps for both capsules.

- Select the HumiditySensor1 asset in the object hierarchy.

- In the Inspector window, click the Add Component button.

- Select Physics > Capsule Collider.

- Set the radius and height of the collider so that it encapsulates the entire capsule.
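If you prefer to set up the collider from a script instead of the Inspector, the steps above can be sketched roughly as follows. This is only an illustration: the SensorColliderSetup class name is hypothetical and not part of the sample, and the sketch assumes that the sensor object has a MeshFilter (as Unity's built-in capsule does) and stands upright along its local Y axis.

```csharp
using UnityEngine;

// Hypothetical helper (not part of the original sample): sizes a Capsule Collider
// so that it encloses the mesh of the object it is attached to.
public class SensorColliderSetup : MonoBehaviour
{
    private void Awake()
    {
        // Reuse an existing collider if there is one; otherwise add a new one.
        var capsule = GetComponent<CapsuleCollider>();
        if (capsule == null)
        {
            capsule = gameObject.AddComponent<CapsuleCollider>();
        }

        var meshFilter = GetComponent<MeshFilter>();
        if (meshFilter == null || meshFilter.sharedMesh == null)
        {
            return; // Nothing to measure; keep the collider's default size.
        }

        // Mesh bounds are in local space, which is also the space the collider uses.
        Bounds bounds = meshFilter.sharedMesh.bounds;
        capsule.direction = 1; // 1 = aligned with the local Y axis (assumption)
        capsule.center = bounds.center;
        capsule.radius = Mathf.Max(bounds.extents.x, bounds.extents.z);
        capsule.height = bounds.size.y;
    }
}
```

Attaching this script to HumiditySensor1 and HumiditySensor2 would have the same effect as setting the radius and height by hand, provided the assumptions above hold for your asset.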

Add Tag to Each Sensor Asset

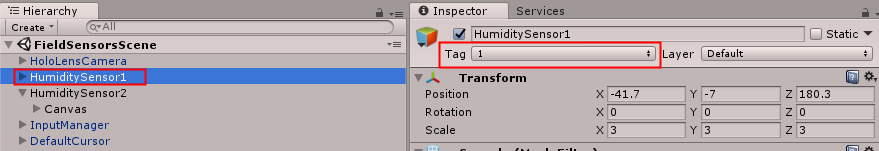

As you may have noticed, the WebAPI expects a sensor id parameter and returns the data for the corresponding device. The application must therefore know which sensor each hologram represents. To do that, we will assign a tag to each sensor.

Create a tag for each sensor from the Inspector window and map them to the appropriate sensor. Below is a screenshot that shows one of the mappings.

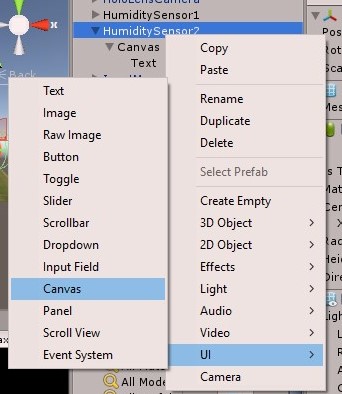
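To make the link between a tag and the WebAPI call concrete, here is a minimal sketch of how a tag value could be turned into a request URL. The SensorUrlBuilder class and its parameters are hypothetical and not part of the sample. The api/{id}?r={number} shape is taken from the request URL used later in this article, and the host name is a placeholder.

```csharp
using System;

// Hypothetical helper (not part of the original sample): builds the WebAPI URL
// for the sensor identified by a Unity tag value.
public static class SensorUrlBuilder
{
    public static string Build(string baseUrl, string sensorTag, int cacheBuster)
    {
        if (string.IsNullOrEmpty(baseUrl))
        {
            throw new ArgumentException("A base URL is required.", "baseUrl");
        }
        if (string.IsNullOrEmpty(sensorTag))
        {
            throw new ArgumentException("A sensor tag is required.", "sensorTag");
        }

        // Escape the tag so that spaces or other special characters stay valid in the path.
        string escapedTag = Uri.EscapeDataString(sensorTag);

        // The r query value only exists to avoid cached responses.
        return baseUrl.TrimEnd('/') + "/api/" + escapedTag + "?r=" + cacheBuster;
    }
}
```

For example, calling Build with "https://azurewebapphostname", a tag of "1", and a cache buster of 42 produces https://azurewebapphostname/api/1?r=42.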

Text Display

Add a Canvas control as a child of each sensor asset by right-clicking the sensor asset in the Object Hierarchy window and selecting the appropriate control. Next, add a Text control as a child of the Canvas control that you just added. These controls will display the sensor data when triggered by a user gesture.

Connecting the MR App to Azure

To allow the application to make external web requests, we need to enable the InternetClient capability. To do that, click the Mixed Reality Toolkit menu and then select Configure > Apply UWP Capability Settings.

From the following dialog, select InternetClient and close the dialog.

Getting the Data

There are several mechanisms available for retrieving data from a WebAPI. In this sample, we will use the WWW class to communicate with our backend services. Once the response has been downloaded, we will deserialize it to the HumidityRecord model using the Newtonsoft Json.NET serializer library.
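For reference, here is a minimal sketch of what the HumidityRecord model and the deserialization step might look like. The property types are assumptions: the article's code only tells us that the model exposes Value and TimestampUtc, so the real model from the backend project may differ. The HumidityRecordParser helper is hypothetical and is shown only to illustrate the Json.NET call.

```csharp
using System;
using Newtonsoft.Json;

// Assumed shape of the model; only Value and TimestampUtc are used in this article.
public class HumidityRecord
{
    public double Value { get; set; }
    public DateTime TimestampUtc { get; set; }
}

// Hypothetical helper (not part of the original sample).
public static class HumidityRecordParser
{
    // Returns null when the payload is empty or cannot be deserialized.
    public static HumidityRecord Parse(string json)
    {
        if (string.IsNullOrEmpty(json))
        {
            return null;
        }

        try
        {
            return JsonConvert.DeserializeObject<HumidityRecord>(json);
        }
        catch (JsonException)
        {
            // Malformed JSON or mismatched types end up here.
            return null;
        }
    }
}
```

Under these assumptions, a payload such as {"Value": 42.5, "TimestampUtc": "2018-06-01T10:15:00Z"} would produce a record whose Value is 42.5.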

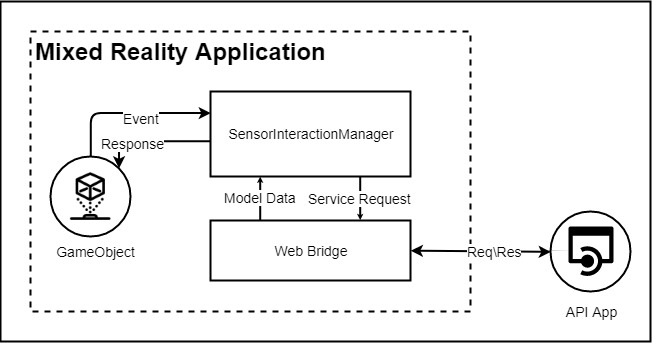

The following diagram illustrates how the SensorInteractionManager and WebBridge scripts, which we will build and attach to the Game Object, interact with the Game Object and the API app to get data.

Create a folder named Scripts in the Assets folder. Add a C# script named WebBridge to the folder. Use the following code to populate the WebBridge class which will be used for making the request.

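    using Newtonsoft.Json;
    using UnityEngine;

    public class WebBridge
    {
        public HumidityRecord GetHumidityRecord(string sensorId)
        {
            // Request the record for the given sensor; the random query value defeats caching.
            var www = new WWW("https://azurewebapphostname/api/" + sensorId + "?r=" + Random.Range(0, 9999));

            // Block until the download finishes. This stalls the main thread,
            // so it is only suitable for short requests in a sample like this one.
            while (!www.isDone) { }

            if (!string.IsNullOrEmpty(www.error))
            {
                Debug.LogError("Request failed: " + www.error);
                return null;
            }

            // Deserialize the JSON response into the HumidityRecord model.
            return JsonConvert.DeserializeObject<HumidityRecord>(www.text);
        }
    }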

There are two ways in which this script can be added to the application.

- Drag and drop this script to the root of the Hierarchy so that it is available globally to all the scripts attached to any objects.

- Drag and drop this script to the appropriate objects so that it is available only to the scripts attached to these objects.

Not all the components in our application need to access the web. Therefore, I will use the second approach to make the script locally available to the scripts attached to the HumiditySensor objects.
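The WebBridge class above waits in a loop until the download completes, which blocks Unity's main thread for the duration of the request. A common alternative is to run the request in a coroutine. The following is a minimal sketch of that idea, not the approach used in this sample: the AsyncWebBridge class and the GetJson method are hypothetical, and the code assumes Unity 2017.2 or later, where UnityWebRequest.SendWebRequest is available. Newer Unity versions replace the isNetworkError and isHttpError flags with a result property, so adjust the check to your version.

```csharp
using System;
using System.Collections;
using UnityEngine;
using UnityEngine.Networking;

// Hypothetical helper (not part of the original sample): downloads a response body
// without blocking the main thread and reports the outcome through callbacks.
public class AsyncWebBridge : MonoBehaviour
{
    public IEnumerator GetJson(string url, Action<string> onSuccess, Action<string> onError)
    {
        using (var request = UnityWebRequest.Get(url))
        {
            // Yielding here lets Unity keep rendering frames while the request runs.
            yield return request.SendWebRequest();

            if (request.isNetworkError || request.isHttpError)
            {
                onError(request.error);
            }
            else
            {
                onSuccess(request.downloadHandler.text);
            }
        }
    }

    // Usage from another MonoBehaviour on the same object (illustrative only):
    //   var bridge = GetComponent<AsyncWebBridge>();
    //   StartCoroutine(bridge.GetJson(url,
    //       json => Debug.Log(json),
    //       error => Debug.LogError(error)));
}
```

Because this variant derives from MonoBehaviour, it can be attached to the sensor objects directly. The trade-off is that the caller receives the data in a callback rather than as a return value.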

Handling Gestures

Handling gestures using Mixed Reality Toolkit helpers is quite easy. You need to implement the right interface, which in our case is the IInputClickHandler interface, and handle the appropriate event, which for us is OnInputClicked. This handler is invoked when the user air-taps the hologram.

Create another script named SensorInteractionManager in the Scripts folder. Add the following code in the class to make an object respond to Air Tap events.

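    using HoloToolkit.Unity.InputModule;
    using UnityEngine;
    using UnityEngine.UI;

    public class SensorInteractionManager : MonoBehaviour, IInputClickHandler
    {
        // Invoked by the toolkit when the user air-taps this object.
        public void OnInputClicked(InputClickedEventData eventData)
        {
            // The Text control lives on the Canvas added as a child of the sensor.
            var textControl = this.gameObject.GetComponentInChildren<Text>();
            // The tag holds the sensor number that the WebAPI expects.
            var sensorNumber = this.gameObject.tag;
            var data = new WebBridge().GetHumidityRecord(sensorNumber);
            // Show the latest reading, or a fallback if nothing came back.
            textControl.text = data == null
                ? "No data available"
                : "Reported value " + data.Value + " @ " + data.TimestampUtc.ToString("g");
        }
    }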

The code in this class is quite straightforward. The first line of code inside the OnInputClicked handler grabs a reference to the Text control which we added as a child to the Canvas control in the Sensor Game Object. Subsequently, it gets the tag of the sensor object and makes a call to the external WebAPI to get the last recorded value that the actual sensor generated. Finally, it modifies the text property of the control to render the updated text.

Attach this script to both the Sensor Game Objects so that the click event generated by these objects can be handled by this script.

Build the Project

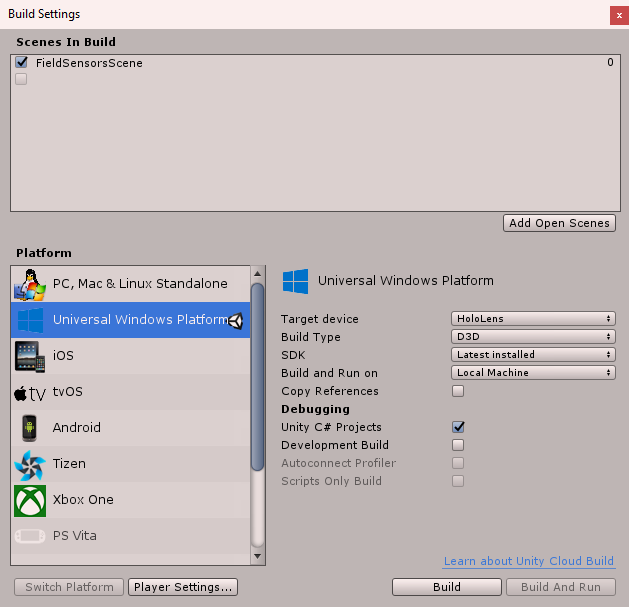

After our project setup is done, we can build the application and test whether everything works as expected. Follow the steps mentioned here to build and test the application. From the main menu, go to File > Build Settings and verify that the following settings have been applied.

- Target Device: HoloLens

- Platform: Universal Windows Platform

- UWP Build Type: D3D

- Build and Run On: Local Machine

- SDK: Latest\Universal 10

- Unity C# Projects: Selected

Add your main scene to the build by clicking the Add Open Scenes button.

In the Build Settings window, click on the Build button.

- Create a new folder named App and select it with a single click.

- Press Select Folder.

After the build process completes, you will find a Visual Studio solution generated in the folder. Use Visual Studio to open this solution file.

- Change build platform target to x86.

- Select HoloLens Emulator from the list of run options.

- Press F5 to debug or press Ctrl + F5 to launch the application without attaching the debugger.

Deploying the Application

Several approaches can be used to deploy the MR application to target devices. Here is an outline of the various approaches that can be used.

Using Visual Studio

After opening the Unity-generated solution in Visual Studio, you can change the run option from the HoloLens emulator to a remote device and have the solution deployed to the attached HoloLens device.

Using Mixed Reality Toolkit

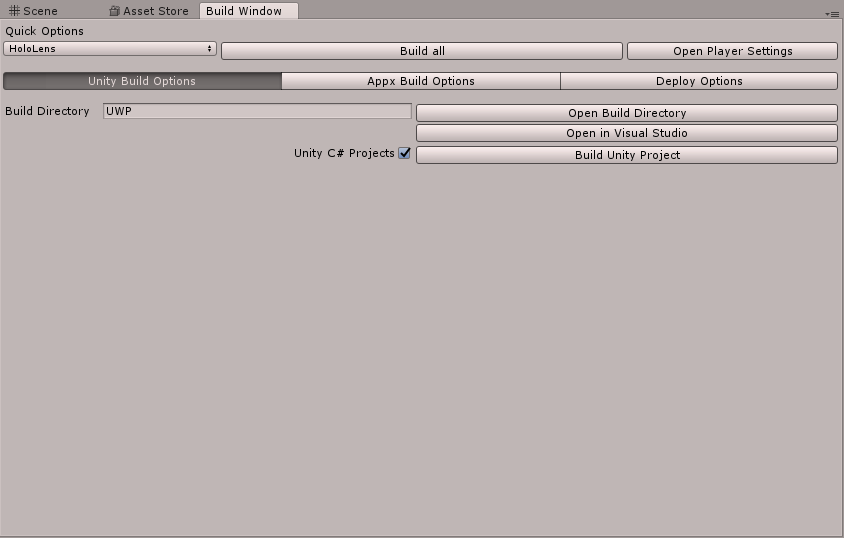

The MR Toolkit Build Window, which can be accessed at Mixed Reality Toolkit > Build Window, can be used for building the application, creating a package, and installing the package on the device or emulator in a single click.

Unity Build Window This dialog window also provides several nifty shortcuts such as Opening the Device Portal, Launching the App, Viewing the Log File and Uninstalling application.

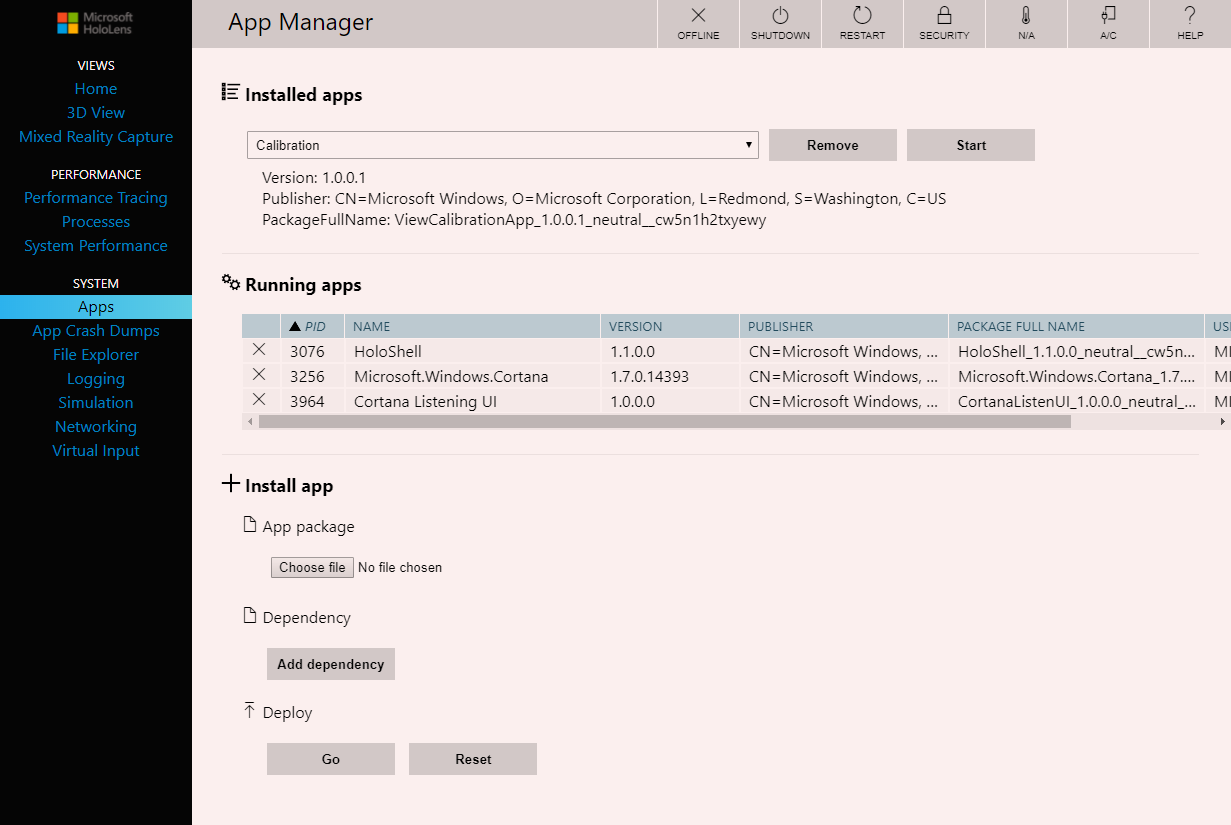

Using the Device Portal

Both the emulator and the device have an associated device portal, which makes it easy to manage the device. You can read more about the device portal and how to access it here. In the portal, navigate to System > Apps.

Here you will find a list of installed apps and running apps which you can manipulate. You can also upload new packages from this window.

HoloLens Device Portal Using Unity Remoting

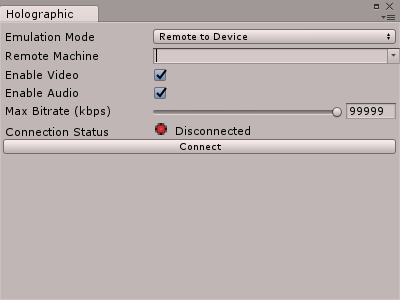

This mechanism of deployment works by setting up a connection between Unity and the HoloLens device. Using Unity remoting, you do not need to build the application or launch it from Visual Studio. Instead, you run your application directly from the Unity editor. To set it up, install the remoting app from the Windows Store on the HoloLens device and connect to the device through the Unity settings.

In Unity, navigate to Window > Holographic Emulation and provide the connection details that are shown by the application that you installed on the HoloLens.

Unity Remoting Window

You can read more about Unity Remoting here.

The Project in Action

Start all the services, including the simulator, and then either deploy the application to the emulator or put on your HoloLens device and launch the application. Try air-tapping the sensors to view their last recorded values. Here is a short video that demonstrates how it works.

Stabilization

The primary goal of the Stabilization phase is to verify that the application meets the acceptance criteria. By the end of this phase, the team releases a feature-complete build (in our case, the app package) that can be deployed to production. Testing during this phase takes place in more realistic environmental conditions. Mixed Reality applications need to be tested in two distinct ways: application testing and environmental testing.

Application Testing

This format of testing is similar to the testing carried out in traditional software applications. Application Testing includes testing the application for performance, security, user load, and functionality among other factors. This testing primarily ensures that the backend systems of the MR application are working as per expectations and the interactions configured in the MR app are as per specification.

Environmental Testing

In MR applications, the surrounding environment plays a pivotal role in driving the experience. Some of the factors that can affect the performance of an MR application are described below.

Lighting

Dim lights and bright lights can make a difference in the experience of the application. Therefore, testing MR applications in various lighting conditions is essential.

Space and Surfaces

MR applications can deviate from the desired behavior in constrained or open areas. If the hologram supports placement on a surface, then a difference in the opacity of surfaces can also cause issues with the application.

Noise

High levels of ambient sound can interfere with voice commands. Therefore, an application that supports voice input should be tested at different levels of ambient noise.

Angle and Distance Perspectives

Holograms occupy coordinates in space, and they can be viewed from various perspectives. Therefore, a hologram should be tested from multiple viewing angles to make sure that its design is correct and as per specifications. The same holds true for distance. Holograms should keep their desired appearance when the user walks toward or away from them.

AV Stimulus

Holograms are objects in coordinate space, so a user might place a hologram at a specific spot and not be able to locate it later. The MR app should provide an audio and/or visual stimulus that helps the user navigate back to the hologram that they were interacting with.

Conclusion

In this series, we learned how to build an enterprise MR IoT application using MSF principles. The phases of MSF can be iterated to deliver the project in an Agile manner, or they can be modeled to fit other approaches such as Waterfall. To extend this application, you can try adding voice commands to it and adding placement capabilities to the model so that it can be placed on top of a stable surface. You can also try adding another type of sensor to the model and making the model proactively render data when the telemetry values indicate an anomaly.
